Difference between revisions of "VALEP:About"

Revision as of 19:07, 6 June 2021

VALEP was programmed by Maximilian Damböck and designed by Christian Damböck. It was launched in May 2021 and announced, e.g., on Daily Nous, Leiter Reports, and the IVC website.

Contents

- 1 Hosts, supporters, and financiers

- 2 VALEP and Phaidra

- 3 Cooperation Partners of VALEP

- 4 The scope and mission of VALEP

- 4.1 Is it digital humanities?

- 4.2 Existing tools are document oriented and typically cover only rudimentary metadata

- 4.3 An archive oriented presentation might be helpful

- 4.4 The logarithmic scale of archival work

- 4.5 Adequate metadata are important

- 4.6 Documents might have instances (versions, chapters) being spread over different archival sources

- 4.7 A note on copyright

- 4.8 Keep material internal as long as the copyright issues could not be positively resolved

- 4.9 Desirable Features

- 4.10 VALEP offers them

- 4.11 Who can use VALEP?

- 5 Future prospects

Hosts, supporters, and financiers

VALEP is located at the server valep.vc.univie.ac.at, which belongs to the University of Vienna. It is operated by the Institute Vienna Circle, which also covers the running costs for the server. Further financial support came from the following sources:

- FWF research grant P31716: € 16,000 for programming in 2020 and 2021, € 2,000 for data processing in 2021

- The Vienna Circle Society: € 5,000 for programming in 2021

- FWF research grant P34887: at least € 12,000 for programming (2021-2023) plus approx. € 30,000 for digitization and data processing (2021-2023)

VALEP and Phaidra

In a future implementation, projected for 2022, VALEP will also mirror its data in Phaidra, the University of Vienna's digital repository. The staff and consultants of Phaidra already supported the development of the first version of VALEP; this included general questions of archival science (Susanne Blumesberger), questions of database design and the technical integration of Phaidra (Raman Ganguly), copyright issues (Seyavash Amini Khanimani), and all details of the metadata design of VALEP (Ratislav Hudak).

Cooperation Partners of VALEP

We are currently seeking cooperation partners among several international archives that house material on the history of Logical Empiricism. If you are interested in cooperating with VALEP as an institution, or simply in using VALEP for your own research and storing the material that you collected in the archives, please contact Christian Damböck.

Archives of Scientific Philosophy (ASP), Hillman Library, University of Pittsburgh

The Archives of Scientific Philosophy share their electronic resources with VALEP. This includes all scans of the papers of Rudolf Carnap, Carl Gustav Hempel, Richard C. Jeffrey, Hans Reichenbach, Frank Plumpton Ramsey, and Rose Rand that have been processed by the ASP. The material comprises about 30,000 scans and is already fully available in VALEP. We would like to thank Ed Galloway for his most generous support and Clinton T. Graham for transferring the files.

The scope and mission of VALEP

VALEP is an archive management tool that is intended as a platform for the history of Logical Empiricism and related currents.

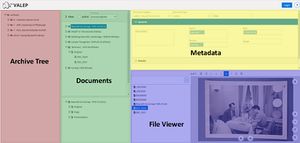

VALEP processes

- (left/red part of the window) the hierarchical structures of archives, which include archives, collections, digitizations, shelves, boxes, folders, and files

- (middle/green part of the window) documents, which turn files of an archive into objects that belong to a certain document category and document type and are specified by metadata such as title, description, author, and date

- (upper right/yellow part of the window) the metadata that characterize all archive nodes and documents

- (lower right/blue part of the window) files and documents, which can be viewed in an integrated document viewer (already available) and downloaded and printed (to be implemented in 2021)
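The two perspectives listed above, the archive tree and the documents built from its files, can be pictured with a minimal Python sketch. All class and field names here (`ArchiveNode`, `Document`, `kind`, and so on) are assumptions made for illustration; they are not VALEP's actual data model.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional

# Node levels taken from the list above; the real schema may differ.
NODE_KINDS = ("archive", "collection", "digitization", "shelf", "box", "folder", "file")


@dataclass
class ArchiveNode:
    """One node of the archive tree (hypothetical model)."""
    name: str
    kind: str
    children: list[ArchiveNode] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.kind not in NODE_KINDS:
            raise ValueError(f"unknown node kind: {self.kind!r}")

    def add(self, child: ArchiveNode) -> ArchiveNode:
        """Attach a child node and return it, so that calls can be chained."""
        self.children.append(child)
        return child

    def count_files(self) -> int:
        """Number of file nodes at or below this node."""
        own = 1 if self.kind == "file" else 0
        return own + sum(child.count_files() for child in self.children)


@dataclass
class Document:
    """A logical unit that groups files of the archive tree (hypothetical model)."""
    title: str
    category: str
    files: list[ArchiveNode] = field(default_factory=list)
    author: Optional[str] = None
    date: Optional[str] = None
```

In this sketch a document only references file nodes, so the same scans remain reachable both through the tree and through the document.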

VALEP stores titles, descriptions, and the like as Unicode text. Some metadata categories, however, including date, location, language, persons, and institutions, are stored via references in a relational database and/or using special formats and parsing tools, e.g., EDTF for dates and an internal tool for the mereological structure of locations. See the metadata page for the details.
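To give a rough idea of what a date format such as EDTF makes possible, the following Python sketch handles a small subset of it: plain years (`1924`), year-months (`1924-12`), full days (`1924-12-24`), and closed intervals written with `/`. It is an illustrative assumption, not VALEP's parser, and it ignores EDTF features such as uncertain or open-ended dates.

```python
import calendar
from datetime import date


def _bounds(part: str) -> tuple[date, date]:
    """Earliest and latest day covered by 'YYYY', 'YYYY-MM' or 'YYYY-MM-DD'."""
    fields = [int(x) for x in part.split("-")]
    if len(fields) == 1:
        (year,) = fields
        return date(year, 1, 1), date(year, 12, 31)
    if len(fields) == 2:
        year, month = fields
        last_day = calendar.monthrange(year, month)[1]
        return date(year, month, 1), date(year, month, last_day)
    if len(fields) == 3:
        day = date(*fields)
        return day, day
    raise ValueError(f"unsupported date expression: {part!r}")


def date_span(expr: str) -> tuple[date, date]:
    """Earliest and latest day of a single date or of an interval 'start/end'."""
    if "/" in expr:
        start, end = expr.split("/")
        return _bounds(start)[0], _bounds(end)[1]
    return _bounds(expr)


def overlaps(expr: str, query: str) -> bool:
    """True if the two date expressions share at least one day."""
    a_start, a_end = date_span(expr)
    b_start, b_end = date_span(query)
    return a_start <= b_end and b_start <= a_end
```

With such a representation, a document dated `1924-12-24/1930-10` would be found by a filter for the year `1927`, because the two spans overlap.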

Is it digital humanities?

If one expects a digital humanities project to adopt sophisticated statistical methods of experimental research, then the answer is clearly no. Though the data pool being built by VALEP might in the future be used for such methods, there are no plans, now or in the near future, to integrate any tools for complex statistical evaluation into VALEP.

On the other hand, VALEP certainly aims to collect large amounts of data. The history of Logical Empiricism, together with related currents such as Neo-Kantianism, French Positivism, British Empiricism, and American Pragmatism, comprises dozens of main figures and probably thousands of minor figures, including university and private scholars. The estates of many of these figures are to be found in public institutions and private collections. Further material was collected by relevant university and private institutions. There are thousands of manuscripts and publications, and probably millions of letters between representatives of the relevant currents, that might be taken into account in one way or another in our studies of Logical Empiricism. VALEP allows us to store any of these sources as soon as they become available to us in electronic form. We can then search and filter them in order to select the material that is relevant for us. This is, of course, also a variety of digital humanities.

Existing tools are document oriented and typically cover only rudimentary metadata

Existing tools for the management of archival sources include (1) the tools that university archives such as the Archives of Scientific Philosophy use; (2) open tools such as PhilArchive, where everybody may upload electronic documents; (3) tools tailored to the presentation of material of a specific origin, such as the papers of Ludwig Wittgenstein. All these tools have in common that they are more or less strictly document oriented. They do not mirror the physical structure of an archive but rather store documents, each of which forms a particular unit of metadata. This approach can be fruitful if the processing of the documents is well developed and the metadata are clear, transparent, and sufficiently complex.

However, most of the existing tools cover only rather rudimentary metadata. In the case of the tools used by public archives, a further problem is that they often do not process single documents as logical units of some kind (e.g., a letter from Otto Neurath to Rudolf Carnap from December 26, 1934) but rather focus on the units naturally provided by the archive, viz., folders that contain, e.g., several letters from Carnap to Neurath from the years 1923 to 1929 and sometimes also include further material that does not directly relate to the main theme. In cases like that, complex metadata may not be possible at all, simply because the document units are too vague.

An archive oriented presentation might be helpful

In cases where a digital archive covers only rudimentary metadata and rather ambiguous documents, it might be most helpful to include a presentation of the digital material that represents the physical structure of an archive. Archives typically structure their material into collections and subcollections, shelves, boxes, folders, and the like, and the fine-grained structure of this organization very often already represents a certain order: it distinguishes, e.g., between manuscripts and correspondence, puts the material into some chronological order, and/or picks out certain topics or correspondence partners. Even if such an order is quite inconsistent and also covers pure chaos at times, users of an archive are usually able to use this order in a mnemotechnical way, often supported by the finding aids that exist for an archive. Therefore, the most obvious way to make electronic archival sources more transparent and usable would be to add a perspective on the material that mirrors the physical structure of the archive.

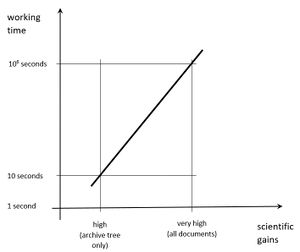

The logarithmic scale of archival work

Note that representing an archive in the way in which it is physically organized also yields a very strong pragmatic benefit. If a digitization of an archive is stored in folders that mirror the structure of the archive, then in VALEP one may upload the entire material, basically, with a single mouse click. The archival work involved here is close to zero. On the other hand, if the archive is really large, as, for example, the Schlick papers in Haarlem, which comprise some 50,000 pages, or the Carnap papers at the ASP, which might come close to 100,000 pages, then a careful processing of the documents that belong to this archive might need to cover several tens of thousands of documents. If an archivist processes, say, 20 documents per hour, this might amount to several years of full-time work. So, we compare here two extremely different points on the scale of gains divided by working time. Most of the available archival resources will never be processed into a fine-grained document structure, for mere lack of time and financial resources. However, these sources might still be made available at the level of representation of the physical structure of the source.
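To put rough numbers on this comparison, the following Python sketch converts a document count and a processing rate into full-time years. The figure of 1,700 working hours per full-time year is an assumption made for illustration only; the rate of 20 documents per hour is the one used above.

```python
def years_of_work(documents: int, docs_per_hour: float,
                  hours_per_year: float = 1700.0) -> float:
    """Full-time years needed to process `documents` at the given rate.

    `hours_per_year` is an assumed value for one full-time position.
    """
    if docs_per_hour <= 0 or hours_per_year <= 0:
        raise ValueError("rates must be positive")
    return documents / docs_per_hour / hours_per_year
```

Under these assumptions, 50,000 documents would take about 1.5 years of full-time work and 100,000 documents about 2.9 years, whereas uploading the folder structure as it stands takes almost no archival work at all.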

Adequate metadata are important

Metadata can be needlessly complex and confusing. A careful selection is important. In particular, a document should be associated only with those metadata categories that might become relevant for it. Only a letter, for example, has a receiver or a place of posting, whereas a manuscript, unlike a published book or article, may not offer any publication data. So, one important aspect of making metadata adequate is to restrict documents of a certain category to the metadata categories that are relevant for it.
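One way to picture such a restriction is a table that lists the permitted metadata categories for each document category. The following Python sketch is a hypothetical illustration; the category and field names are assumptions and do not reproduce VALEP's actual metadata schema.

```python
# Hypothetical mapping from document category to permitted metadata fields.
COMMON_FIELDS = {"title", "description", "author", "date"}
CATEGORY_FIELDS = {
    "letter": COMMON_FIELDS | {"receiver", "place_of_posting"},
    "manuscript": COMMON_FIELDS,
    "publication": COMMON_FIELDS | {"publisher", "place_of_publication"},
}


def validate_metadata(category: str, metadata: dict[str, str]) -> list[str]:
    """Return the metadata keys that are not permitted for this category."""
    allowed = CATEGORY_FIELDS[category]
    return sorted(key for key in metadata if key not in allowed)
```

An empty result means that the record uses only fields that make sense for its category; a manuscript carrying a `receiver`, for instance, would be reported.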

But metadata should also be carefully selected with regard to their format. This holds, in particular, for key metadata such as date and location. Dates should be able to cover not only (several) single days but also entire months or years, and date ranges, e.g., from December 24, 1924 until October 1930. This allows one to cover cases where the date of a document cannot be pinned down precisely or where a document was produced over a longer period and/or on different days or in different years. Locations, on the other hand, should be embedded into the mereological structure of geography. That Vienna, for example, belongs to Austria and Europe but also to the Habsburg Empire and the German-speaking world is a fact that is not easy to reproduce, but it is needed in order to pick out all Viennese locations if one filters documents from Vienna, Austria, Europe, the Habsburg Empire, or the German-speaking world. Finally, in many other cases, e.g., regarding persons, institutions, and languages, a consistently searchable and filterable layout is easily obtained if the database uses relational features and stores these items in predefined lists or tables. References to these predefined resources, however, should typically be optional, in order to keep the structure of the database as flexible as possible.
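The mereological embedding of locations can be sketched as a part-of relation in which a place may have several parents. The following Python example is an illustrative assumption, not VALEP's internal tool; the small `PART_OF` table is made up for the example and ignores the fact that such relations change over time.

```python
# Hypothetical part-of relation; a place may belong to several regions.
PART_OF = {
    "Vienna": {"Austria", "Habsburg Empire", "German-speaking world"},
    "Austria": {"Europe"},
    "Berlin": {"Germany", "German-speaking world"},
    "Germany": {"Europe"},
}


def containers(place: str) -> set[str]:
    """All regions that contain `place`, including the place itself."""
    seen = {place}
    stack = [place]
    while stack:
        current = stack.pop()
        for parent in PART_OF.get(current, set()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


def filter_by_location(documents: dict[str, str], region: str) -> list[str]:
    """Titles of documents whose location lies within `region`."""
    return sorted(title for title, place in documents.items()
                  if region in containers(place))
```

A filter for "Europe" or for "Habsburg Empire" then picks out a document located in Vienna without either region being stored on the document itself.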

Documents might have instances (versions, chapters) being spread over different archival sources

An important aspect of the integration of several archival sources is that documents tend to be located not only in one folder/box that belongs to collection X. Rather, the following holds quite frequently:

- The original document is located in archive X, whereas copies are to be found in other archives, e.g., blueprints of a letter kept by the sender (which might contain relevant information that the original letter does not provide)

- There are written duplicates of a document, transcriptions, and translations as well as commentaries that lie at very different archival locations.

- Finally, a document might disintegrate into several parts or chapters that, in turn, might be spread over different archives (some of them might be the original source, some might be copies, written duplicates, transcriptions, etc.)
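The cases listed above suggest a model in which one general document groups several instances that may live in different archives. The following Python sketch is a hypothetical illustration; the names `GeneralDocument` and `Instance` and the role labels are assumptions, not VALEP's actual classes.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Instance:
    """One instance of a document (hypothetical model)."""
    archive: str       # archive in which this instance is kept
    role: str          # e.g. "original", "copy", "transcription", "translation"
    chapter: str = ""  # empty if the instance covers the whole document


@dataclass
class GeneralDocument:
    """A document together with all of its known instances."""
    title: str
    instances: list[Instance] = field(default_factory=list)

    def archives(self) -> set[str]:
        """All archives that hold at least one instance."""
        return {instance.archive for instance in self.instances}

    def by_role(self, role: str) -> list[Instance]:
        """All instances with the given role."""
        return [instance for instance in self.instances if instance.role == role]
```

Keeping the instances separate from the general document makes it possible to describe a letter once while still recording that the original and a copy are kept in different places.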

A note on copyright

The international copyright situation dictates that it is unproblematic, in principle, to make all varieties of metadata openly available, whereas facsimiles may be published online only if (α) the copyright was granted to the publishers by the copyright holders, or (β) there is a legal situation that allows publication without explicit transfer of copyright. (β) falls into two typical case types: (β-1) publication of a document is possible if all involved authors died at least 70 years ago, which makes the material public domain; (β-2) publication is possible if the publishers can prove that the copyright holders could not be identified though the publishers tried to find them in several reasonable ways.

Keep material internal as long as the copyright issues could not be positively resolved

Another logarithmic scale emerges here. For big figures such as Carnap, Reichenbach, or Quine, it is often quite easy to get copyrights granted for everything they wrote. But in their papers there is also a wealth of material that was written by others - letters TO Carnap, Quine, Reichenbach - or that touches upon privacy rights of others - when Carnap talks in a letter ABOUT a person X. To solve all the copyright issues that emerge in a huge Nachlass might become a tedious and almost unmanageable task. Therefore, it is desirable that a database makes it possible to deal with these issues in a maximally flexible way. Material might be either kept internal in its entirety - metadata plus facsimiles - or it might be published (because metadata are unproblematic, in principle) but without public access to the facsimiles. Moreover, it should be possible to restrict access to the internal level of a database to those parts of the material that the account holder is allowed to see.
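The three situations described above (fully internal, published metadata with restricted facsimiles, fully public) can be sketched as a small access check. The following Python example is an assumption made for illustration; the names `Visibility` and `can_view` are hypothetical and say nothing about how VALEP implements its permissions.

```python
from enum import Enum


class Visibility(Enum):
    INTERNAL = "internal"            # metadata and facsimiles hidden from the public
    METADATA_ONLY = "metadata_only"  # metadata public, facsimiles restricted
    PUBLIC = "public"                # metadata and facsimiles public


def can_view(visibility: Visibility, part: str, permitted: bool) -> bool:
    """Decide whether a user may see `part` ("metadata" or "facsimile").

    `permitted` is True if the user's account has internal access
    to this particular piece of material.
    """
    if part not in ("metadata", "facsimile"):
        raise ValueError(f"unknown part: {part!r}")
    if permitted:
        return True
    if visibility is Visibility.PUBLIC:
        return True
    if visibility is Visibility.METADATA_ONLY:
        return part == "metadata"
    return False
```

The `permitted` flag stands for the per-account restriction: internal material is visible only to those account holders who are allowed to see that part of the archive.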

Desirable Features

Along the lines of these considerations, the following features would be desirable additions to the typical coverage of existing archival tools:

- To cover the physical structure of an archive (in order to serve the mnemotechnical skills of researchers and make existing finding aids more useful)

- To provide parts with high gains and low costs first and add the rest -- very high gains and very high costs -- only in those cases where the existing resources make this possible

- To provide a flexible handling of metadata categories that tailor them to the required document categories

- To ensure that critical metadata categories such as date and location use a most flexible, consistent and transparent format, together with suitable parsing tools (that avoid inconsistent entries)

- To implement other critical metadata via predefined lists and tables in a relational database setting, while keeping data fields optional whenever possible

- To provide suitable tools that enable the processing of decentralized documents that disintegrate into several versions and chapters

- To enable keeping parts of published material restricted - access to the metadata but not to the facsimiles - or even keeping metadata and the facsimiles internal, as long as copyright issues remain unsolved

VALEP offers them

Indeed, the aforementioned features are all offered by VALEP. The design of this tool was from the beginning centered around the idea of combining representation of an archive via its physical structure with representation via documents. The rest of the innovative features of VALEP in part followed directly from this key idea -- this is true, for example, of the implementation of versions and chapters, which mediate between (general) documents and archives --, and in part entered the design on the basis of feedback from archivists and the designer's own experience at the archives.

Who can use VALEP?

VALEP is available to everybody and is free of charge in all its varieties. Typical users of VALEP might include:

- Public and private institutions that house material on the history of Logical Empiricism and want to use VALEP as a tool that helps them to distribute their sources and integrate them with other relevant material

- Private persons who hold collections relevant for the history of Logical Empiricism and want to use VALEP not just to distribute and integrate their sources but also to safeguard them for the future

- Researchers from all over the world who digitized material in the archives and are willing to share this with others and/or want to use VALEP as a tool that allows them to process and better organize their sources

If you are interested in using VALEP as an institution, private person, or researcher, please contact Christian Damböck.

Future prospects

The current (and first) version of VALEP was developed in 2020/21. By fall 2021 we plan to implement, among other things, the following additional features:

- Persistent links to all documents, versions, files, and nodes of the archive tree

- Possibility to assign DOIs to general documents

Features to be implemented in 2022 (preliminary list)

- Integration of Phaidra: each published VALEP object is stored in Phaidra

- The possibility to selectively restore deleted VALEP objects

- Possibilities to mark objects in VALEP with flags, together with advanced filter tools

- Bundles of documents can be loaded to the file viewer

- The sequence of jpgs that is loaded to the file viewer can be downloaded as a pdf

- In the internal view (construction site) the nested content of any node of the archive tree can be downloaded to the local computer

- For each node of the archive tree, the number of files that belong to this node is displayed

If you find any bugs, or want to report shortcomings of VALEP or point to desired features, please contact Christian Damböck.